OCHRE Overview

An overview of the OCHRE database platform employed by the Forum for Digital Culture to support computational research of all kinds through all stages from the initial acquisition of data to its final publication and archiving.

By David Schloen and Sandra Schloen (June 2023)

A Computational Platform for All Kinds of Data and All Stages of Research

The Online Cultural and Historical Research Environment (OCHRE) is a tightly integrated suite of computational tools for working with data of all kinds through all stages of a research project. It provides a seamless environment within which it is easy to move from one stage to the next.

OCHRE has been in operation continuously for more than 20 years and is currently used by more than 100 projects in the humanities and social sciences, and also in some natural sciences. It has 10 million indexed database items representing more than 100 terabytes of data, with room for much more. It is a scalable and sustainable platform for research and publication that is maintained by the Forum for Digital Culture in conjunction with the University of Chicago Library.

OCHRE Supports All Stages of Research

There are five stages of computational work in a typical research project. OCHRE has many sophisticated features to make it easy for project teams to accomplish these tasks via an intuitive user interface without having to write their own code or manage individual data files.

1. Acquire the Data

The first stage is to acquire the data for the project by importing existing digital files, fetching data dynamically from online data sources, capturing the output of data-capture devices (e.g., digital cameras, 2D document scanners, and 3D laser scanners), or manually keying in the data.

2. Integrate the Data

The second stage is to integrate data that has been derived from disparate sources and is stored in different digital formats by making the data conform to a coherent project taxonomy that regularizes the names of entities and the relations between them. This can be done while respecting the terminology and conceptual distinctions of each project and without forcing everyone into the same rigid mold.

3. Analyze the Data

The third stage is to explore and analyze the data by means of database queries, statistical analysis, geospatial mapping, and other methods of pattern recognition and data visualization.

4. Publish the Data

The fourth stage is to publish the data on the Web along with the results of data analysis and visualization, i.e., as much of the data as the creator of the data wishes to publish, without assuming that all the data will be made public. Published data should remain permanently accessible online so that scholars and students can easily find it and use it and cite it reliably in their own work, just as they would cite a non-digital printed publication. This has been hard to achieve in digital humanities but is possible via the OCHRE platform.

5. Preserve the Data

The fifth and final stage is to preserve all the data, including both published data and unpublished data, in a standards-compliant fashion so it can be re-used over the long term. University libraries play an important role at this stage by curating digital data and migrating it to new storage media and formats as technologies change.

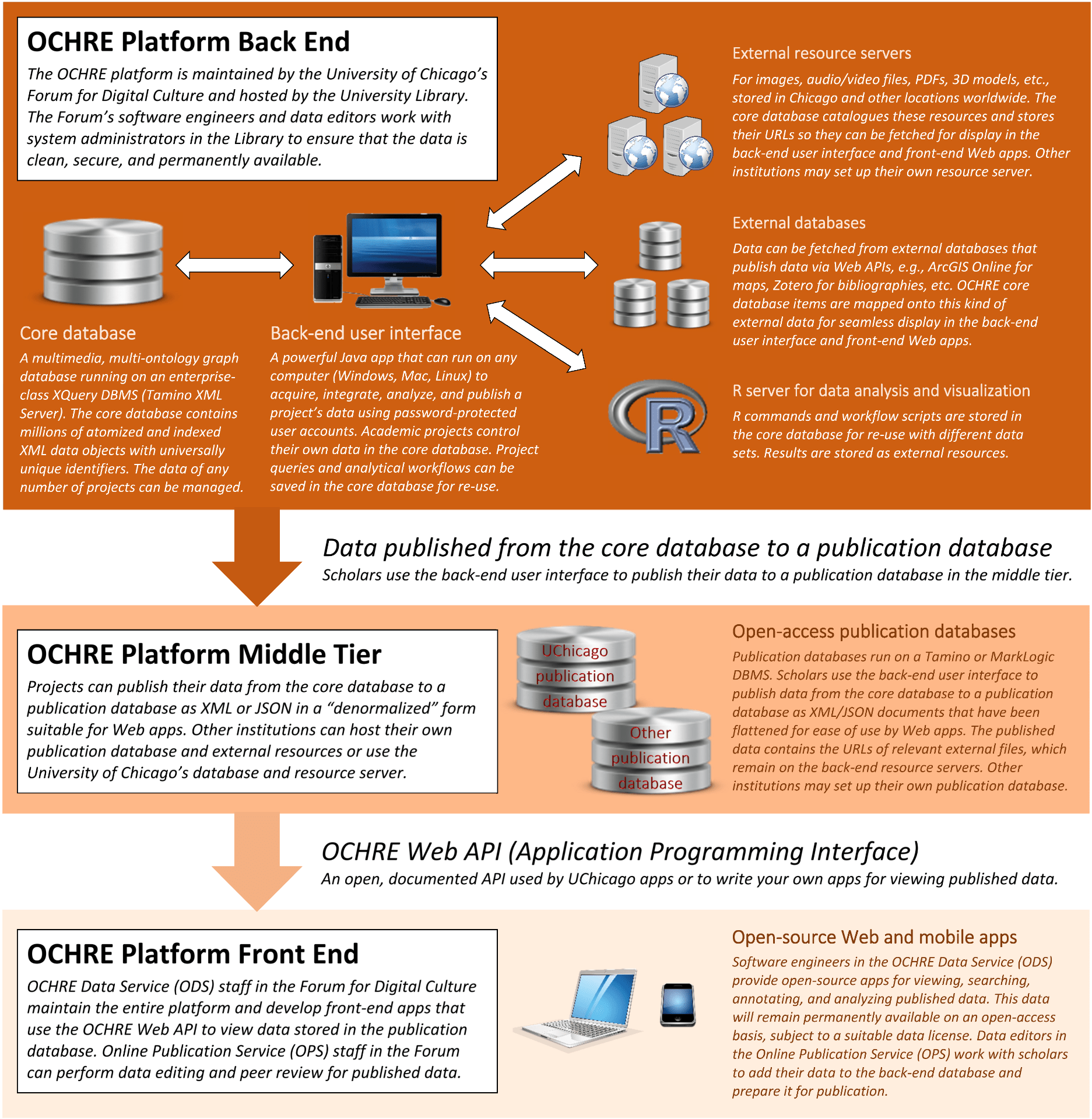

OCHRE Has a Back End and a Front End

The OCHRE platform has several interconnected components to support seamlessly all five stages of research. On the back end of the platform is the core database: a multimedia, multi-ontology, semistructured graph database with an intuitive user interface that scholars use to build content in the core database via password-protected user accounts. The back-end user interface is used by many different academic projects to acquire, integrate, analyze, and publish their data. Only authorized members of a project who have been given a user account by the project director can view and edit the project’s data in the core database.

The front end of the OCHRE platform consists of Web and mobile apps that let anyone view and analyze (but not edit) data that projects have chosen to publish from the core database. The project director decides which, if any, of the project’s data is made public in this way. There is a middle tier between the front end and the back end consisting of open-access publication databases that contain published data in a form that is easy for Web apps to use, i.e., the highly atomized and efficient multi-dimensional graph structure of the core database is exposed in a publication database as flattened (“denormalized”) XML or JSON documents. The front-end apps access the publication databases via a well-documented Web API (Application Programming Interface).
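The idea of exposing an atomized graph as flattened ("denormalized") documents can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the item IDs, the field names, the relation labels, and the `flatten` helper are hypothetical and do not reproduce OCHRE's actual schema or API.

```python
import json

# Hypothetical atomized graph: items keyed by ID, plus (source, relation, target) links.
# None of these IDs, field names, or relation labels come from OCHRE itself.
items = {
    "item-1": {"type": "spatial unit", "name": "Room 12"},
    "item-2": {"type": "spatial unit", "name": "Hearth A"},
    "item-3": {"type": "resource", "name": "Photo of Hearth A"},
}
links = [
    ("item-1", "contains", "item-2"),
    ("item-2", "depicted in", "item-3"),
]


def flatten(item_id, items, links, seen=None):
    """Return one self-contained nested document for item_id.

    Linked items are copied inline (denormalized) so that a Web app can
    render the document without further lookups. `seen` guards against cycles.
    """
    seen = (seen or set()) | {item_id}
    doc = {"id": item_id, **items[item_id]}
    for source, relation, target in links:
        if source == item_id and target not in seen:
            doc.setdefault(relation, []).append(flatten(target, items, links, seen))
    return doc


document = flatten("item-1", items, links)
print(json.dumps(document, indent=2))
```

The trade-off shown here is the usual one for denormalization: the nested document repeats information that the graph stores only once, in exchange for being readable in a single request.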

Academic projects use the back-end user interface to acquire data from different sources, in any digital format. Access to the database is controlled via password-protected user accounts given to project members, who log in with varying privileges for viewing and editing their project’s data as specified by the director of the project or a designated project administrator. All the data stored in the core database is entered or imported by individual academic projects and is attributed to and controlled by the project that entered it.

After the data has been acquired, the back-end user interface is used to integrate the data in accordance with the project’s own taxonomy and relational structures and then to analyze the data. No one can view or change a project’s data except registered users who have user accounts given to them by the director of the project or a designated project administrator.

A project may use the back-end user interface to publish its data. There is no requirement to do so, but whatever has been published will remain permanently accessible and citable on the front end of the platform on an open-access basis, even if new editions of the data are published subsequently. Published data contains unique identifiers and persistent URLs for each piece of information, no matter how small, e.g., each image and map and data record has its own persistent URL.

Finally, the director of the project or a designated project administrator may use the back-end user interface to generate RDF triples in order to preserve the project’s data for long-term archival storage outside the OCHRE platform. An RDF archive preserves all the atomized entities and relations stored in the core graph database in an open, standardized format that is compatible with the Semantic Web. An RDF archive of OCHRE data does not depend on the OCHRE software but can be loaded into any database system that supports the RDF standard.

OCHRE Is Comprehensive, Scalable, and Sustainable

OCHRE is a comprehensive computational platform for all stages of research. The alternative is to employ an ad hoc collection of separate software tools for data management, statistics, image processing, geospatial mapping, online publication, and so on. But that approach requires the cumbersome transferring of data from one piece of software to another using intermediate file formats and chunks of code (scripts) that most researchers either do not know how to write or do not want to debug and maintain. The result is a series of time-consuming and error-prone tasks in which it is easy to lose track of the many pieces of information accumulated in a typical project. By contrast, OCHRE users have a comprehensive view of all their data in all stages of the project and an intuitive user interface with which to view, edit, analyze, and publish the data, without having to code their own scripts or manually transfer data from one piece of software to another.

OCHRE is comprehensive because the innovative semistructured graph database used on the back end of the platform is based on a foundational ontology (meta-ontology) that is universal in scope and can accommodate any number of project-specific or domain-specific ontologies while faithfully preserving each project’s own terminology. The database schema that implements this foundational ontology is highly scalable, being able to accommodate any number of research projects with high efficiency. The database runs on Tamino XML Server, an enterprise-class native-XML database management system (DBMS) that provides sophisticated indexing and fast querying of the data using XQuery.

OCHRE is not only comprehensive and scalable but also sustainable because it is institutionally supported and maintained by the University of Chicago’s Forum for Digital Culture (a unit of the Division of the Humanities) in collaboration with the University Library. The Digital Library Development Center of the University Library provides system administration and multi-generation data back-up for the OCHRE core database and for the OCHRE publication database and external resource server maintained by the University of Chicago. Other institutions may choose to host their own publication database and external resource server, although scholars everywhere are free to use the ones hosted by the University of Chicago.

OCHRE has been in operation since 2001. It has been thoroughly tested and enhanced over the years in close consultation with academic researchers in a wide range of fields in the humanities and social sciences, and in some of the natural sciences (e.g., paleontology, population genetics, and astronomy). OCHRE is currently being used by more than 100 projects in the United States, Canada, Europe, and the Middle East. As of June 2023 there were approximately 1,000 user accounts for researchers and their students to add data for their projects. The database contains approximately 10 million indexed items representing over 100 terabytes of data. This scale of usage has enabled rigorous real-world testing of the software for a wide range of use cases and has demonstrated the system’s sustainability over a long period of time. In the future, we expect to add many more projects and users.

OCHRE Is Open and Freely Accessible

In addition to being scalable and sustainable, OCHRE is “open” and accessible in the following ways:

Open Standards

The OCHRE platform is entirely based on non-proprietary “open” standards published by the World Wide Web Consortium (W3C), i.e., XML, XML Schema, XSLT, XQuery, HTML, RDF, and SPARQL, supplemented by JSON, which is an ISO standard, and IIIF, a set of image data standards published by a global consortium of research libraries.

Open Access

All the data that projects choose to publish from the OCHRE core database is available on an open-access basis via Web apps provided by the Forum for Digital Culture, with no paywall, subject to a Creative Commons license that requires non-commercial use with attribution to the creators of the data. Published data is made available in widely supported open-standard data formats (XML and JSON plus IIIF for image data).

Open Source

All the apps provided on the front end of the OCHRE platform are open source. These are JavaScript/HTML/CSS apps for viewing, analyzing, and annotating data published by projects from the core database on the back end of the platform. The back end itself contains a mixture of open-source software combined with proprietary software for which there is no good open-source alternative. This is the normal practice when building enterprise-class database systems with high scalability and high availability.

A word about open source: Open-source software is obviously desirable whenever it is available and sufficient for the task at hand, in order to minimize financial barriers to access that inhibit non-commercial academic use of the system by scholars and students. But very few people use only open-source software throughout the entire software stack. This is because open-source software that remains usable over the long term is not “free.” Someone has to be paid to maintain it and document it; thus open source alone does not ensure accessibility. A vast amount of open-source software ends up orphaned and unusable, as we have seen over and over again in digital humanities when a project’s funding runs out or its leaders retire, causing the website to go dark. This has led academic funding agencies to question whether it is the best use of their resources to pay for large numbers of boutique software applications that end up being unsustainable, whether open source or not.

In the case of OCHRE, the cost of licensing proprietary software from commercial vendors is borne by the University of Chicago for the benefit of its own faculty’s research. This benefit is extended to non-Chicago scholars at a minimal cost due to the economies of scale engendered by sharing a common platform with a single code base. Scholars use this shared platform free-of-charge, although they may be asked to pay a modest fee or apply for a grant to help cover the cost of data conversion, data hosting, and user training for their own projects. The permanent full-time staff of the Forum for Digital Culture maintain the OCHRE platform and facilitate its use over the long term.

Acknowledgments and Further Reading

The OCHRE platform was designed by David Schloen and Sandra Schloen, each of whom has a degree in computer science from the University of Toronto and professional experience in software engineering. The richly featured Java application that powers the back end and provides a user interface for building content in the core database was written by Sandra Schloen, who oversees the technical implementation of all aspects of the platform.

The ontological structure of the OCHRE database in terms of recursively nested item-attribute-value triple statements about basic categories of entities (persons, spatial units, temporal units, attributes, values, etc.) was conceived in the early 1990s and implemented in a relational database under a different name: Integrated Facility for Research in Archaeology (INFRA), for which the software was also written by Sandra Schloen. This was done before XML and RDF were invented. After those data standards were introduced by the World Wide Web Consortium (W3C) in the late 1990s, they were used to express the OCHRE ontology and to build its semistructured graph database.

As soon as XML 1.0 became a W3C recommendation in February 1998, development of the XML schemas for the OCHRE database began and the system became operational in 2001 using an early version of XQuery and Tamino XML Server, even though XQuery 1.0 did not become an official W3C recommendation until January 2007. OCHRE has undergone continuous development and enhancement for more than 20 years and has been tested with many different use cases at the University of Chicago and elsewhere.

Development and testing of some of the front-end features of the OCHRE platform was done with support from a $1.75-million Scientific Software Integration grant from the U.S. National Science Foundation’s Office of Advanced Cyberinfrastructure (award no. 1450455). Additional funding for research projects to use and test the OCHRE platform has come from the Neubauer Collegium for Culture and Society of the University of Chicago, the Social Sciences and Humanities Research Council of Canada, the National Endowment for the Humanities, and private donors.

See the OCHRE Wiki for details about how OCHRE is used in practice. The Wiki contains the latest information about acquiring, integrating, analyzing, and publishing a project’s data.

For additional material on OCHRE and its theoretical rationale, see the sections below and the “OCHRE Database” and “OCHRE Ontology” pages of this website. See also the following publication:

“Beyond Gutenberg: Transcending the Document Paradigm in Digital Humanities,” by J. David Schloen and Sandra R. Schloen, Digital Humanities Quarterly vol. 8, no. 4 (2014)

OCHRE Embraces Multiple Ontologies

OCHRE was initially developed for use in archaeology and philology. These fields of research have widely shared empirical methods but they are characterized by a high degree of ontological diversity, such that similar phenomena are described in different ways by different researchers. In fact, ontological diversity is characteristic of almost all fields of study in the humanities and social sciences and is found in varying degrees even in the natural sciences. (An “ontology,” in the sense intended here, is a formal specification of the concepts and relations in a given domain of knowledge. A hierarchical taxonomy is a common, and relatively simple, kind of ontology. See the OCHRE Ontology page of this website for a more detailed discussion of ontologies and the philosophical questions they raise.)

For this reason, computational tools for working with the vast body of scholarly knowledge now expressed in digital form must cope with the reality that such knowledge has been recorded by many different people using divergent ontologies. Each ontology reflects the nomenclature and conceptual distinctions relevant to a particular research community, and perhaps also reflects the idiosyncratic views of an individual researcher. No single ontology, no matter how complex and ramified, will be suitable for all purposes. There is an endless array of conceptual possibilities depending on the subject matter and the questions being asked, not to mention the linguistic traditions and historically situated perspectives of the scholars involved.

It is important to remember that ontological diversity is not a problem in itself. Indeed, it is inherent in the practice of research because different ontologies reflect different interpretive frameworks and research agendas; they are not just the result of sloppy thinking or individual quirks and egotism. Ontological diversity is not a vice to be eliminated, in a misguided attempt to standardize human ways of knowing, but rather a defining virtue of critically minded communities of thought that are open to multiple perspectives.

The practices of digital knowledge representation that emerged in large governmental and business organizations suppress ontological diversity. This reflects the fact that these are hierarchical organizations with central semantic authorities that mandate standard ontologies to be used throughout the organization. Unfortunately, these diversity-suppressing digital practices have permeated academic research, even though most scholars lack a central semantic authority, especially in the humanities. But since the vast majority of software development is done within and for governmental and business organizations, it is not surprising that most designers of information storage and retrieval systems assume without question that ontological standards are necessary, even in academic settings. These standards are typically expressed as a single prescribed database schema for each predetermined class of structured data, or perhaps as a set of prescribed markup tags for natural-language texts of a given type.

Ontological prescriptions of this sort cause problems for researchers because they force them to adopt standard ontologies that employ terms and distinctions which may not be suitable for their own work. On the other hand, allowing people to use their own ontologies inhibits automated integration and comparison of data among research projects. For this reason, a mechanism for automated querying and comparison that spans multiple ontologies is required. What is needed is database software that does not suppress ontological diversity via forced standardization but instead embraces it, while also facilitating semantic data integration across ontological boundaries. Data integration can be achieved in the face of diversity by making it easy for scholars to create semantic mappings from one ontology to another — not through additional software coding, which is prohibitively expensive, but by letting them add thesaurus relationships between the taxonomic terms of different ontologies within an intuitive user interface, perhaps with the assistance of AI (deep learning) tools. The querying software can then use these thesaurus relationships to do automatic query expansion, retrieving semantically comparable information from many projects at once.
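Query expansion over thesaurus relationships can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the project prefixes, terms, records, and function names are invented, and the sketch treats every thesaurus link as a symmetric, transitive equivalence, which is a simplification (a real thesaurus may also distinguish broader and narrower terms).

```python
from collections import defaultdict

# Hypothetical thesaurus links between taxonomic terms of different projects.
thesaurus = [
    ("projectA:cooking pot", "projectB:cookpot"),
    ("projectB:cookpot", "projectC:olla"),
    ("projectA:storage jar", "projectB:pithos"),
]

# Hypothetical records, each described in its own project's terminology.
records = [
    {"term": "projectA:cooking pot", "label": "A-101"},
    {"term": "projectB:cookpot", "label": "B-7"},
    {"term": "projectC:olla", "label": "C-33"},
    {"term": "projectB:pithos", "label": "B-9"},
]


def build_index(pairs):
    """Index thesaurus links in both directions (assumes symmetric links)."""
    index = defaultdict(set)
    for left, right in pairs:
        index[left].add(right)
        index[right].add(left)
    return index


def expand(term, index):
    """Return the term plus every term reachable through thesaurus links."""
    found, pending = {term}, [term]
    while pending:
        current = pending.pop()
        for neighbor in index.get(current, ()):
            if neighbor not in found:
                found.add(neighbor)
                pending.append(neighbor)
    return found


def query(term, records, index):
    """Retrieve records whose term matches the expanded set of terms."""
    terms = expand(term, index)
    return [record["label"] for record in records if record["term"] in terms]


index = build_index(thesaurus)
matches = query("projectA:cooking pot", records, index)
print(matches)  # ['A-101', 'B-7', 'C-33']
```

Each project keeps its own vocabulary; only the thesaurus links are shared, so adding a mapping requires no change to the query code.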

This is what OCHRE was designed to do. It was engineered from the outset to respect the deeply rooted practices of semantic autonomy in the humanities by directly modeling each project’s own terminology and conceptual distinctions, avoiding any attempt forcibly to standardize ontologies across multiple projects, while still permitting semantic mappings across projects for large-scale querying and analysis. OCHRE thereby upholds the hermeneutical principle that meaning depends on context. In our view, software for digital humanities should acknowledge this hermeneutical principle and support the demand of modern scholars for the freedom to describe phenomena of interest in the light of their own critical judgments without being forced to conform to someone else’s ontology due to its being inscribed in the very structure of the computer system.

OCHRE achieves this goal by means of a foundational ontology (meta-ontology) defined in terms of very basic conceptual categories such as space, time, agency, and discourse. This ontology is implemented in the logical schema of a semistructured graph database, which can thus model any local or project-specific ontology within a universal ontological structure. As a result, OCHRE is very flexible and customizable. It does not force researchers to conform to a predetermined recording system but lets them use their own terminologies and conceptual distinctions. And it does so while providing powerful mechanisms for ingesting and integrating existing data; for querying and analyzing data; and for publishing and archiving a project’s data in an open, standards-compliant fashion.

OCHRE Uses Recursion to Model Space, Time, Language, and Taxonomy

Archaeologists and art historians study the material traces of human cultures. Philologists and literary critics study the historical development and interconnections of languages, literatures, and systems of writing. Linguists and philosophers study human linguistic capacities and the structure of language in general. All these disciplines exhibit, not just ontological diversity, but a high proportion of relatively unstructured and semistructured information in the form of qualitative descriptions and natural-language texts. This kind of information is best represented digitally by means of open-ended hierarchies (trees) of recursively nested entities rather than by means of rigid tables that have one row for each entity and a predetermined column for each property of the entities represented in the table.

Archaeology and philology also entail close attention to geographical and chronological variations in the phenomena being studied. And when dealing with the spatial and temporal relations among entities, researchers need mechanisms for representing not just absolute locations in space and time, in terms of numeric map coordinates and calendar dates, but also the relative placement of spatial objects or temporal events with respect to other spatial and temporal phenomena. This, too, is best accomplished computationally by representing spatial units of observation and temporal periods by means of open-ended hierarchies of recursively nested entities of the same kind — spatial or temporal, as the case may be — with the same structures at each level of the hierarchy regardless of scale, allowing the use of powerful recursive programming techniques to search and analyze the hierarchies.
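A minimal Python sketch of such a recursive hierarchy follows. The `Unit` class, the period names, and the dates are hypothetical and chosen only for illustration. The sketch shows how a unit that has no absolute dates of its own can still be located relatively, through its position in the hierarchy, and how the same recursive function works at every level because every node has the same structure.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Unit:
    """A spatial or temporal unit; its children are units of the same kind."""
    name: str
    start: Optional[int] = None  # absolute year (negative = BCE), if known
    end: Optional[int] = None
    children: List["Unit"] = field(default_factory=list)


def find_path(unit: Unit, name: str, path: Tuple[str, ...] = ()) -> Optional[Tuple[str, ...]]:
    """Relative placement: the chain of containing units down to `name`."""
    here = path + (unit.name,)
    if unit.name == name:
        return here
    for child in unit.children:
        found = find_path(child, name, here)
        if found is not None:
            return found
    return None


def date_range(unit: Unit, name: str, inherited=None):
    """Absolute range of `name`, falling back to its nearest dated ancestor.

    Returns None if the unit is not found or no dated ancestor exists.
    """
    has_dates = unit.start is not None and unit.end is not None
    own = (unit.start, unit.end) if has_dates else inherited
    if unit.name == name:
        return own
    for child in unit.children:
        result = date_range(child, name, own)
        if result is not None:
            return result
    return None


# Hypothetical chronology; names and dates are invented for this example.
chronology = Unit("Period A", -1200, -900, [
    Unit("Sub-period A1", -1200, -1050),
    Unit("Sub-period A2", -1050, -900, [
        Unit("Phase 3"),  # placed only relative to its parent
    ]),
])

print(find_path(chronology, "Phase 3"))   # ('Period A', 'Sub-period A2', 'Phase 3')
print(date_range(chronology, "Phase 3"))  # (-1050, -900)
```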

Large collaborative projects in archaeology and philology have been test beds for OCHRE and provide examples of its use. But we have found that software methods developed to deal with the spatial, temporal, linguistic, and taxonomic complexity of archaeological and philological data are applicable to a much wider range of research. This is so because the OCHRE software is based on powerful conceptual abstractions expressed in an innovative database structure characterized by overlapping recursive hierarchies of highly atomized entities. OCHRE’s hierarchical and recursive data model can flexibly represent scholarly knowledge of all kinds without sacrificing the power of modern databases because it is implemented, not in an unconstrained web of knowledge that is difficult to search efficiently, as is common in simpler graph databases, but by means of well-indexed and atomized database items that conform to a predictable hierarchical schema, thus enabling semantically rich and efficient queries. Accordingly, OCHRE is now being used, not just in archaeology and philology, but in many other areas of the humanities and social sciences, and also in branches of the natural sciences where spatial, temporal, and taxonomic variation are key concerns, such as population genetics (comparing ancient and modern DNA), paleoclimatology, and other kinds of environmental research.

OCHRE Ingests and Manages All Kinds of Research Data

OCHRE supports a wide range of digital formats and data types: textual, numeric, visual, sonic, geospatial, etc. A project’s textual and numeric data are ingested into the OCHRE database, where they are atomized and manipulated as individual keyed-and-indexed database items. OCHRE can automatically import textual and numeric data stored in comma-separated value files (CSV), Excel spreadsheets (XLSX), Word documents (DOCX), and other XML documents (e.g., digital texts in the TEI-XML format).

In contrast, a project’s 2D images, 3D models, GIS mapping data, PDF files, and audio/video clips are not stored directly in the central OCHRE database but are catalogued in the central database as “external resources” to be fetched as needed from external HTTP or FTP servers. External resources are linked to keyed-and-indexed items in the central database via their URLs and are fetched as needed and displayed seamlessly together with a project’s textual and numeric data, which is stored internally in the database. Items in the database can be linked to specific locations within an image or other resource (e.g., a pixel region in a photograph or a page in a multi-page document).

OCHRE uses ArcGIS Online and the ArcGIS Runtime SDK (embeddable software components) to provide a powerful mapping and spatial analysis capability that is tightly integrated with other data. This is especially important for archaeology but is necessary also for many other kinds of research. Spatial-containment relationships and chronological systems of temporal relationships (periods and sub-periods) can be represented in a way that makes it easy to work with both relative and absolute dates and lets users visualize temporal sequences via graphical timelines. More generally, relationships of all kinds — temporal, spatial, social, linguistic, etc. — can be modeled and visualized as node-arc network graphs and can be used in database queries that incorporate the extrinsic relationships among entities as well as their intrinsic properties.

OCHRE Has Advanced Capabilities for Textual Research

For textual projects, OCHRE has sophisticated capabilities for representing texts written in any language and writing system, modern or ancient. The epigraphic (physical) and discursive (linguistic) dimensions of a text are carefully distinguished in OCHRE as separate recursive hierarchies linked to one another by cross-hierarchy relations between epigraphic units (physical marks of inscription) and the discourse units (linguistic meanings) that a reader recognizes when reading the text. This distinction between the epigraphic and discursive dimensions of a text is necessary for many kinds of scholarly analysis but is muddled in the Text Encoding Initiative (TEI) encoding scheme, for example.

Moreover, in the OCHRE database the writing system itself is represented separately from texts that use it to avoid confusion between the ideal signs of a writing system as understood abstractly and the physical instantiations of the signs as they appear in particular texts. Writing systems are represented as sets of ideal signs modeled separately from the epigraphic hierarchies of texts in which each sign is instantiated by some allograph or other of it. This is necessary when dealing with ancient logosyllabic writing systems such as Mesopotamian cuneiform or Egyptian hieroglyphs, whose signs have many possible phonetic values and allographic variants, and it is quite useful even when dealing with alphabetic writing systems, which often have allographic variations of scholarly interest across the texts in which they are instantiated.

Finally, in addition to distinguishing scholarly analyses of the epigraphic hierarchy of a text from analyses of its discourse hierarchy, and also distinguishing the signs of a writing system from the epigraphic units in which these signs are instantiated, OCHRE represents the lexicon of each language or dialect as a separate set of ideal lexical units contained in dictionary lemmas. The lexical units of a language are instantiated by the discourse units of texts written in that language. A word-level discourse unit is normally linked to the epigraphic units that were read to produce it and also to the particular grammatical form of the word within a dictionary lemma.

This allows the software to compile automatically for each lemma all the grammatical forms of the word and all the orthographic and allographic variations in the spelling of each grammatical form of the word, together with textual citations of the use of each form in context generated automatically from the texts in which they appear. OCHRE can thus generate from its database a dictionary view that looks like an OED-style corpus-based dictionary, constructed dynamically from the underlying text editions with no error-prone duplication of information. Text editions are closely interwoven with dictionaries, on the one hand, and with analyses of writing systems, on the other, making it easy to explore computationally the entire web of connections of interest to philology.
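The compilation step described above can be sketched in Python as a grouping operation over word-level discourse units. The record layout, the sample words, and the `compile_entry` function are assumptions made for illustration and are not OCHRE's data model; the point is only that the dictionary entry is derived from the texts rather than stored as a separate copy.

```python
from collections import defaultdict

# Hypothetical word-level discourse units, each linked to a lemma, a
# grammatical form, the spelling attested in the text, and a citation.
discourse_units = [
    {"lemma": "colour", "form": "singular", "spelling": "colour", "citation": "Text 1, line 3"},
    {"lemma": "colour", "form": "singular", "spelling": "color", "citation": "Text 2, line 8"},
    {"lemma": "colour", "form": "plural", "spelling": "colours", "citation": "Text 1, line 9"},
    {"lemma": "colour", "form": "singular", "spelling": "colour", "citation": "Text 3, line 1"},
    {"lemma": "shade", "form": "singular", "spelling": "shade", "citation": "Text 1, line 4"},
]


def compile_entry(lemma, units):
    """Group attested spellings and citations by grammatical form for one lemma."""
    entry = defaultdict(lambda: defaultdict(list))
    for unit in units:
        if unit["lemma"] == lemma:
            entry[unit["form"]][unit["spelling"]].append(unit["citation"])
    # Convert to plain dicts so the result is easy to inspect or serialize.
    return {form: dict(spellings) for form, spellings in entry.items()}


entry = compile_entry("colour", discourse_units)
for form, spellings in entry.items():
    for spelling, citations in spellings.items():
        print(f"{form}: {spelling} ({'; '.join(citations)})")
```

Because the entry is rebuilt from the discourse units on demand, correcting a reading in a text edition changes the dictionary view with no second edit.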

The value of the OCHRE data model for textual studies is illustrated by the multi-project Critical Editions for Digital Analysis and Research (CEDAR) initiative at the University of Chicago. The CEDAR projects are producing online critical editions of a wide range of culturally influential or “canonical” texts — ancient, medieval, and modern — written in diverse languages and writing systems and transmitted over long periods in multiple copies and translations. Textual variation in long-lasting textual traditions of this kind can be modeled computationally as a textual “space of possibilities” using OCHRE’s basic model of overlapping recursive hierarchies of entities with cross-hierarchy relations between entities in different hierarchies, which in this case are hierarchies of epigraphic units and discourse units.

In contrast, most software for digital humanities conflates the epigraphic and discursive dimensions of a text, which is usually represented by a single hierarchy of textual components, as in the Text Encoding Initiative (TEI) markup scheme. However, this yields an inadequate digital representation of the conceptual entities and relations that scholars employ when constructing critical editions. For more on this, see the 2014 article in Digital Humanities Quarterly entitled “Beyond Gutenberg: Transcending the Document Paradigm in Digital Humanities” by David Schloen and Sandra Schloen.

OCHRE Is Compatible with the Semantic Web and Linked Open Data

As was noted above, OCHRE is based on the open standards published by the World Wide Web Consortium (W3C), the organization responsible for the technical specifications of the Web itself and of the Semantic Web. OCHRE can expose and archive the data contained in its back-end database using the standard graph-data format of the Semantic Web, which is based on the W3C’s Resource Description Framework (RDF) and Web Ontology Language (OWL).

RDF represents knowledge in the form of subject-predicate-object “triples,” which constitute statements about entities. In terms of mathematical graph theory, a collection of RDF triple-statements is a labeled, directed graph. RDF triples can be queried using the W3C’s SPARQL querying language and can be easily imported into, or exported from, any graph database system that supports the Semantic Web standards.

RDF triples are well suited for the long-term archiving of OCHRE data in a standardized format that preserves all the conceptual distinctions and relationships projects have made when entering their data into the OCHRE database. RDF triples can be implemented in a number of different syntactical forms (e.g., in XML notation or Turtle notation) and do not depend on any particular software or operating system, so an RDF archive exported from the OCHRE database does not depend on the OCHRE software.

OCHRE can easily generate RDF triples in any notation that is desired because it stores data in a structurally identical way, as triple-statements about entities of interest, although in OCHRE these are called item-attribute-value triples rather than subject-predicate-object triples. OCHRE can also expose its data dynamically as SPARQL endpoints for other software to use. Thus OCHRE is fully compatible with the Semantic Web and the Linked Open Data approach that is based on the Semantic Web standards.
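The structural correspondence between item-attribute-value statements and subject-predicate-object triples can be shown with a short Python sketch that writes N-Triples, one of the standard RDF notations. The namespace, the item identifiers, and the attribute names are hypothetical, and the sketch is not OCHRE's export routine; it uses only the standard library and assumes that identifiers contain no characters that would need escaping inside an IRI.

```python
BASE = "https://example.org/ochre-sketch/"  # hypothetical namespace, not an OCHRE URL


def iri(local_name):
    """Wrap a local identifier as an absolute IRI in N-Triples syntax."""
    return f"<{BASE}{local_name}>"


def literal(text):
    """Quote a plain string literal, escaping characters N-Triples reserves."""
    escaped = (
        text.replace("\\", "\\\\")
        .replace('"', '\\"')
        .replace("\n", "\\n")
        .replace("\r", "\\r")
    )
    return f'"{escaped}"'


def to_ntriples(statements):
    """Map (item, attribute, value, value_is_item) tuples to N-Triples lines.

    item -> subject, attribute -> predicate, value -> object. The value is
    written as an IRI when it names another item, otherwise as a literal.
    """
    lines = []
    for item, attribute, value, value_is_item in statements:
        obj = iri(value) if value_is_item else literal(value)
        lines.append(f"{iri(item)} {iri(attribute)} {obj} .")
    return lines


# Hypothetical item-attribute-value statements.
statements = [
    ("item-2", "material", "basalt", False),
    ("item-1", "contains", "item-2", True),
]

ntriples = to_ntriples(statements)
print("\n".join(ntriples))
```

The resulting lines are plain text, so an archive in this form can be read by any RDF-aware tool without reference to the software that produced it.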
